YouTube Implements New Capture Disclosure to Combat Deepfakes

In a world of increasingly fake content, whether generated by AI, edited with filters, or reposted from other sources, this could be a significant step.

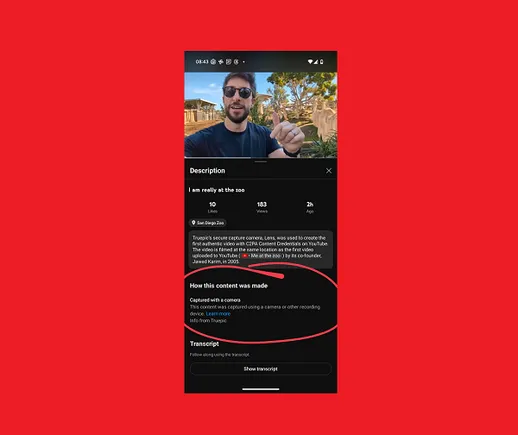

This week, YouTube launched a new disclosure label that shows when a video was captured with a device or tool that supports the C2PA standard, indicating that the content is genuine and hasn’t been altered from its original form.

As you can see in this example, posted by Sherif Hanna, Google’s C2PA product lead, the new info tag explains how the content in the video was recorded.

Google says that it will display this disclosure on content captured with certain cameras, software, or mobile apps that meet the standards set by the Coalition for Content Provenance and Authenticity (C2PA).

As per Google:

“For ‘captured with a camera’ to appear in the expanded description, creators must use tools with built-in C2PA support (version 2.1 or higher) to capture their videos. This allows the tools to add special information, metadata, to the video file, confirming its authenticity. YouTube will relay the information that the content was ‘Captured with a camera,’ and apply the disclosure when it detects this metadata. The content must also not have edits to sound or visuals. This disclosure indicates that the content was captured using a camera or other recording device with no edits to sounds or visuals.”

So when this label is applied, you can trust that the footage is genuine, which adds an extra level of assurance to the upload.

That’s interesting, considering that Google is one of several tech giants pushing the use of AI and the distribution of AI-generated content online. Just last week, for example, Meta prompted users to share AI-generated photos of the northern lights, while this week, Google-owned YouTube began testing AI-generated replies to comments.

So the platforms themselves are actively pushing people to share more artificial content, and in some ways, the addition of C2PA tags runs counter to this by acknowledging the potential harm of such content.

But the tags may also serve an important purpose, especially around world events, where deepfakes of incidents could shape public perception.

The tags could make it harder to pass off fake footage of real events, because the metadata will show that what people are seeing is unaltered and reflects the actual event. This could eventually become the standard for event coverage, helping to ensure that we’re not being deceived by doctored video.

That, of course, is increasingly important in a world dominated by generative AI. So while it may seem to run counter to Google’s broader AI push, it actually aligns with the company’s overall efforts.

Either way, it adds another layer of transparency. It isn’t perfect and won’t catch every deepfake, but it’s a step forward, and one that will become increasingly important over time.

Google has been working to implement the C2PA standard over the past eight months, after signing on to the project back in February. Now, we’re seeing the first elements in action.

It’s a worthwhile project, and its value should grow as more cameras and apps add built-in C2PA support.
